UIST 2025 PDF

At A Glance To Your Fingertips: Enabling Direct Manipulation for Distant Objects Through Gaze-based Summoning

Yang Liu, Thorbjørn Mikkelsen, Zehai Liu, Gengchen Tian, Diako Mardanbegi, Qiushi Zhou, Hans Gellersen, Ken Pfeuffer

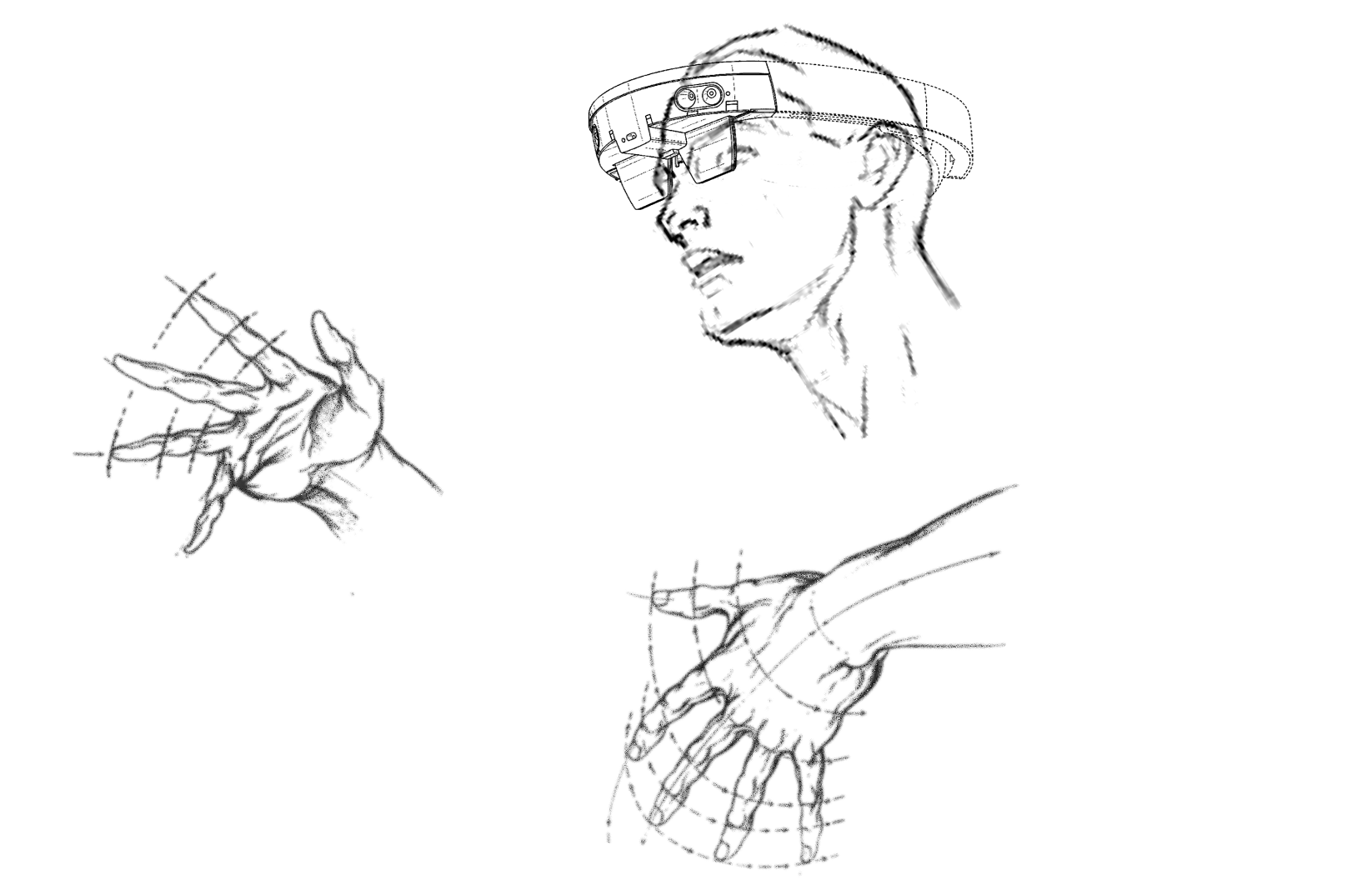

In 3D interfaces, people commonly manipulate objects over distance. This work introduces SightWarp, an interaction technique that leverages eye-hand coordination to instantiate object proxies directly at the user’s fingertips. After looking at a remote object, users either shift their gaze to the hand or move their hand into view, triggering the creation of a near-space proxy of the object and its surrounding context. The proxy remains active until the eye-hand pattern is released. The main benefit is that users always have an option to immediately operate on the object through a natural, direct hand gesture. Through a user study of a 3D object docking task, we show that users can easily perform proxy summoning, and that subsequent direct manipulation improves performance over standard Gaze+Pinch interaction. We demonstrate how SightWarp complements Gaze+Pinch, enabling a seamless switch between direct and indirect modes at a distance. Application examples illustrate its utility for tasks like cross-space manipulation, overview and detail, and interacting with world-in-miniatures. Our work contributes to more expressive and flexible object interaction across near and far spaces.

CHI 2025 PDF

PinchCatcher: Enabling Multi-selection for Gaze+Pinch

Jinwook Kim, Sangmin Park, Qiushi Zhou, Mar Gonzalez-Franco, Jeongmi Lee, Ken Pfeuffer

This paper investigates multi-selection in XR interfaces based on eye and hand interaction. We propose enabling multi-selection using different variations of techniques that combine gaze with a semi-pinch gesture, allowing users to select multiple objects, while on the way to a full-pinch. While our exploration is based on the semi-pinch mode for activating a quasi-mode, we explore four methods for confirming subselections in multi-selection mode, varying in effort and complexity: dwell-time (SemiDwell), swipe (SemiSwipe), tilt (SemiTilt), and non-dominant hand input (SemiNDH), and compare them to a baseline technique. In the user study, we evaluate their effectiveness in reducing task completion time, errors, and effort. The results indicate the strengths and weaknesses of each technique, with SemiSwipe and SemiDwell as the most preferred methods by participants. We also demonstrate their utility in file managing and RTS gaming application scenarios. This study provides valuable insights to advance 3D input systems in XR.

HRI 2025 PDF

Assisting MoCap-Based Teleoperation of Robot Arm using Augmented Reality Visualisations

Qiushi Zhou, Antony Chacon, Jiahe Pan, Wafa Johal

Teleoperating a robot arm involves the human operator positioning the robot’s end-effector or programming each joint. Whereas humans can control their own arms easily by integrating visual and proprioceptive feedback, it is challenging to control an external robot arm in the same way, due to its inconsistent orientation and appearance. We explore teleoperating a robot arm through motion-capture (MoCap) of the human operator’s arm with the assistance of augmented reality (AR) visualisations. We investigate how AR helps teleoperation by visualising a virtual reference of the human arm alongside the robot arm to help users understand the movement mapping. We found that the AR overlay of a humanoid arm on the robot in the same orientation helped users learn the control. We discuss findings and future work on MoCap-based robot teleoperation.

CHI 2024 PDF

The Effects of Generative AI on Design Fixation and Divergent Thinking

Samangi Wadinambiarachchi, Ryan M. Kelly, Saumya Pareek, Qiushi Zhou, Eduardo Velloso

Generative AI systems have been heralded as tools for augmenting human creativity and inspiring divergent thinking, though with little empirical evidence for these claims. This paper explores the effects of exposure to AI-generated images on measures of design fixation and divergent thinking in a visual ideation task. Through a between-participants experiment (N=60), we found that support from an AI image generator during ideation leads to higher fixation on an initial example. Participants who used AI produced fewer ideas, with less variety and lower originality compared to a baseline. Our qualitative analysis suggests that the effectiveness of co-ideation with AI rests on participants’ chosen approach to prompt creation and on the strategies used by participants to generate ideas in response to the AI’s suggestions. We discuss opportunities for designing generative AI systems for ideation support and incorporating these AI tools into ideation workflows.

CHI 2024 PDF

Augmented Reality at Zoo Exhibits: A Design Framework for Enhancing the Zoo Experience

Brandon Syiem, Sarah Webber, Ryan Kelly, Qiushi Zhou, Jorge Goncalves, Eduardo Velloso

Augmented Reality (AR) offers unique opportunities for contributing to zoos’ objectives of public engagement and education about animal and conservation issues. However, the diversity of animal exhibits poses challenges in designing AR applications that are not encountered in more controlled environments, such as museums. To support the design of AR applications that meaningfully engage the public with zoo objectives, we first conducted two scoping reviews to interrogate previous work on AR and broader technology use at zoos. We then conducted a workshop with zoo representatives to understand the challenges and opportunities in using AR to achieve zoo objectives. Additionally, we conducted a field trip to a public zoo to identify exhibit characteristics that impact AR application design. We synthesise the findings from these studies into a framework that enables the design of diverse AR experiences. We illustrate the utility of the framework by presenting two concepts for feasible AR applications.

IMWUT 2023 PDF

Reflected Reality: Augmented Reality through the Mirror

Qiushi Zhou, Brandon Victor Syiem, Beier Li, Jorge Goncalves, Eduardo Velloso

We propose Reflected Reality: a new dimension for augmented reality that expands the augmented physical space into mirror reflections. By synchronously tracking the physical space in front of the mirror and the reflection behind it using an AR headset and an optional smart mirror component, reflected reality enables novel AR interactions that allow users to use their physical and reflected bodies to find and interact with virtual objects. We propose a design space for AR interaction with mirror reflections, and instantiate it using a prototype system featuring a HoloLens 2 and a smart mirror. We explore the design space along the following dimensions: the user’s perspective of input, the spatial frame of reference, and the direction of the mirror space relative to the physical space. Using our prototype, we visualise a use case scenario that traverses the design space to demonstrate its interaction affordances in a practical context. To understand how users perceive the intuitiveness and ease of reflected reality interaction, we conducted an exploratory study and a formal user evaluation to characterise user performance of AR interaction tasks in reflected reality. We discuss the unique interaction affordances that reflected reality offers, and outline possibilities of its future applications.

INTERACT 2023 PDF

“Hello, Fellow Villager!”: Perceptions and Impact of Displaying Users’ Locations on Weibo

Ying Ma, Qiushi Zhou, Benjamin Tag, Zhanna Sarsenbayeva, Jarrod Knibbe, Jorge Goncalves

In April 2022, Sina Weibo began to display users’ coarse location for the stated purpose of regulating their online community. However, this raised concerns about location privacy. Through sentiment analysis and Latent Dirichlet Allocation (LDA), we analysed the users’ attitudes and opinions on this topic across 20,162 related posts and comments. We labelled 300 users as either supportive, critical, or neutral towards the feature, and captured their posting behaviour two months prior and two months post the launch. Our analysis elicits three major themes in the public discussion: online community atmosphere, privacy issues, and equity in the application of the feature, and shows that most people expressed a negative attitude. We find a drop in activity by objectors during the first month after the launch of the feature before gradually resuming. This work provides a large-scale firsthand account of people’s attitudes and opinions towards online location privacy on social media platforms.

CHI 2023 PDF

Here and Now: Creating Improvisational Dance Movements with a Mixed Reality Mirror

Qiushi Zhou, Louise Grebel, Andrew Irlitti, Julie Ann Minaai, Jorge Goncalves, Eduardo Velloso

This paper explores using mixed reality (MR) mirrors for supporting improvisational dance making. Motivated by the prevalence of mirrors in dance studios and inspired by Forsythe’s Improvisation Technologies, we conducted workshops with 13 dancers and choreographers to inform the design of future MR visualisation and annotation tools for dance. The workshops involved using a prototype MR mirror as a technology probe that reveals the spatial and temporal relationships between the reflected dancing body and its surroundings during improvisation; speed dating group interviews around future design ideas; follow-up surveys and extended interviews with a digital media dance artist and a dance educator. Our findings highlight how the MR mirror enriches dancers’ temporal and spatial perception, creates multi-layered presence, and affords appropriation by dancers. We also discuss the unique place of MR mirrors in the theoretical context of dance and in the history of movement visualisation, and distil lessons for broader HCI research.

CHI 2023 PDF

Volumetric Mixed Reality Telepresence for Real-time Cross Modality Collaboration

Andrew Irlitti, Mesut Latifoglu, Qiushi Zhou, Martin Reinoso, Eduardo Velloso, Thuong Hoang, Frank Vetere

Mixed-reality telepresence allows local and remote users to feel as if they are present together in the same space. In this paper we report on a mixed-reality volumetric telepresence system that is adaptable, multi-user and cross-modal, i.e. combining augmented and virtual reality technologies with face-to-face interactions. The system extends the state of the art by creating full-body and environmental volumetric renderings in real-time over local enterprise networks. We report findings of an evaluation in a training scenario which was adapted for remote delivery and led by an industry professional. Analysis of interviews and observed behaviours identifies varying attitudes towards virtually mediated full-body experiences and highlights the impact of volumetric mixed-reality telepresence to facilitate personal experiences of co-presence and to ground communication with interlocutors.

ISMAR 2022 PDF

Blending On-Body and Mid-Air Interaction in Virtual Reality

Difeng Yu, Qiushi Zhou, Tilman Dingler, Eduardo Velloso, Jorge Goncalves

On-body interfaces, which leverage the human body’s surface as an input or output platform, can provide new opportunities for designing VR interaction. However, it remains unclear how on-body interfaces can best support current VR systems that mainly rely on mid-air interaction. We propose BodyOn, a collection of six design patterns that leverage combined on-body and mid-air interfaces to achieve more effective 3D interaction. Specifically, a user may use thumb-on-finger gestures, finger-on-arm gestures, or on-body displays with mid-air input, including hand movement and orientation, to complete an interaction task. To test our design concepts, we implemented example interaction techniques based on BodyOn that can assist users in various 3D interaction tasks. We further conducted an expert evaluation using the techniques as probes to elicit immediate design issues that emerge from the novel combination of on-body and mid-air interaction. We provide insights that can inspire and inform the design of future 3D user interfaces.

DIS 2022 PDF

Movement Guidance using a Mixed Reality Mirror

Qiushi Zhou, Andrew Irlitti, Difeng Yu, Jorge Goncalves, Eduardo Velloso

Mirror reflections offer an intuitive and realistic Mixed Reality (MR) experience comparable to other MR interfaces. Their high visual fidelity, and the sensorimotor contingency from the reflected moving body, make the mirror an ideal instrument for MR movement guidance. The translucent two-way mirror display enables users to follow a virtual humanoid instructor’s movement accurately by visually matching it with their reflections. In this work, we conduct the first formal evaluation of movement acquisition performance with simple motor tasks, using visual guidance from an MR mirror and a humanoid virtual instructor. Our performance results and subjective ratings indicate that, compared with a simulated virtual mirror and with traditional screen-based movement guidance, the real MR mirror yields better acquisition performance and a stronger sense of embodiment with the reflection for upper-body movement. However, the benefits diminish with larger-range head movements. We provide design guidelines for future mirror movement guidance interfaces and MR mirror experiences at large.

🏅 CHI 2021 (Honourable Mention) PDF

Dance and Choreography in HCI: A Two-Decade Retrospective

Qiushi Zhou, Chengcheng Chua, Jarrod Knibbe, Jorge Goncalves, Eduardo Velloso

Designing computational support for dance is an emerging area of HCI research, incorporating the cultural, experiential, and embodied characteristics of the third-wave shift. The challenges of recognising the abstract qualities of body movement, and of mediating between the diverse parties involved in the idiosyncratic creative process, present important questions to HCI researchers: how can we effectively integrate computing with dance, to understand and cultivate the felt dimension of creativity, and to aid the dance-making process? In this work, we systematically review the past twenty years of dance literature in HCI. We discuss our findings, propose directions for future HCI works in dance, and distil lessons for related disciplines.

IEEE TVCG 2020 (ISMAR) PDF

Eyes-free Target Acquisition During Walking in Immersive Mixed Reality

Qiushi Zhou, Difeng Yu, Martin Reinoso, Joshua Newn, Jorge Goncalves, Eduardo Velloso

Reaching towards out-of-sight objects during walking is a common task in daily life; however, the same task can be challenging when wearing immersive Head-Mounted Displays (HMD). In this paper, we investigate the effects of spatial reference frame, walking path curvature, and target placement relative to the body on user performance of manually acquiring out-of-sight targets located around their bodies, as they walk in a spatial-mapping Mixed Reality (MR) environment wearing an immersive HMD. We found that walking and increased path curvature negatively affected the overall spatial accuracy of the performance, and that the performance benefited more from using the torso as the reference frame than the head. We also found that targets placed at maximum reaching distance yielded less error in angular rotation and depth of the reaching arm. We discuss our findings with regard to human walking kinesthetics and the sensory integration in the peripersonal space during locomotion in immersive MR. We provide design guidelines for future immersive MR experiences featuring spatial mapping and full-body motion tracking to provide a better embodied experience.

🏅 IEEE TVCG 2020 (ISMAR Best Paper Nomination) PDF

Fully-Occluded Target Selection in Virtual Reality

Difeng Yu, Qiushi Zhou, Joshua Newn, Tilman Dingler, Jorge Goncalves, Eduardo Velloso

The presence of fully-occluded targets is common within virtual environments, ranging from a virtual object located behind a wall to a datapoint of interest hidden in a complex visualization. However, efficient input techniques for locating and selecting these targets are mostly underexplored in virtual reality (VR) systems. In this paper, we developed an initial set of seven techniques for fully-occluded target selection in VR. We then evaluated their performance in a user study and derived a set of design implications for simple and more complex tasks from our results. Based on these insights, we refined the most promising techniques and conducted a second, more comprehensive user study. Our results show how factors such as occlusion layers, target depths, object densities, and the estimation of target locations can affect technique performance. Our findings from both studies and distilled recommendations can inform the design of future VR systems that offer selections for fully-occluded targets.

CHI 2020 PDF

Faces of Focus: A Study on the Facial Cues of Attentional States

Ebrahim Babaei, Namrata Srivastava, Joshua Newn, Qiushi Zhou, Tilman Dingler, Eduardo Velloso

Automatically detecting attentional states is a prerequisite for designing interventions to manage attention—knowledge workers’ most critical resource. As a first step towards this goal, it is necessary to understand how different attentional states are made discernible through visible cues in knowledge workers. In this paper, we demonstrate the important facial cues to detect attentional states by evaluating a data set of 15 participants that we tracked over a whole workday, which included their challenge and engagement levels. Our evaluation shows that gaze, pitch, and lips part action units are indicators of engaged work; while pitch, gaze movements, gaze angle, and upper-lid raiser action units are indicators of challenging work. These findings reveal a significant relationship between facial cues and both engagement and challenge levels experienced by our tracked participants. Our work contributes to the design of future studies to detect attentional states based on facial cues.

IEEE VR 2020 PDF

Engaging Participants during Selection Studies in Virtual Reality

Difeng Yu, Qiushi Zhou, Benjamin Tag, Tilman Dingler, Eduardo Velloso, Jorge Goncalves

Selection studies are prevalent and indispensable for VR research. However, due to the tedious and repetitive nature of many such experiments, participants can become disengaged during the study, which is likely to impact the results and conclusions. In this work, we investigate participant disengagement in VR selection experiments and how this issue affects the outcomes. Moreover, we evaluate the usefulness of four engagement strategies to keep participants engaged during VR selection studies and investigate how they impact user performance when compared to a baseline condition with no engagement strategy. Based on our findings, we distill several design recommendations that can be useful for future VR selection studies or user tests in other domains that employ similar repetitive features.

IMWUT 2019 (UbiComp Workshop) PDF

Ubiquitous Smart Eyewear Interactions using Implicit Sensing and Unobtrusive Information Output

Qiushi Zhou, Joshua Newn, Benjamin Tag, Hao-Ping Lee, Chaofan Wang, Eduardo Velloso

Premature technology, privacy, intrusiveness, power consumption, and user habits are all factors potentially contributing to the lack of social acceptance of smart glasses. After investigating the recent development of commercial smart eyewear and its related research, we propose a design space for ubiquitous smart eyewear interactions that maximises interactivity with minimal obtrusiveness. We focus on implicit and explicit interactions enabled by the combination of miniature sensor technology, low-resolution display and simplistic interaction modalities. Additionally, we present example applications outlining future development directions. Finally, we aim to raise awareness of designing for ubiquitous eyewear with implicit sensing and unobtrusive information output abilities.

CHI 2019 (LBW) PDF

Cognitive Aid: Task Assistance Based On Mental Workload Estimation

Qiushi Zhou, Joshua Newn, Namrata Srivastava, Tilman Dingler, Jorge Goncalves, Eduardo Velloso

In this work, we evaluate the potential of using wearable non-contact (infrared) thermal sensors through a user study (N=12) to measure mental workload. Our results indicate the possibility of mental workload estimation through the temperature changes detected using the prototype as participants perform two task variants with increasing difficulty levels. While the sensor accuracy and the design of the prototype can be further improved, the prototype showed the potential of building AR-based systems with cognitive aid technology for ubiquitous task assistance from the changes in mental workload demands. As such, we demonstrate our next steps by integrating our prototype into an existing AR headset (i.e. Microsoft HoloLens).

🏅 OzCHI 2017 (Honourable Mention) PDF

GazeGrip: Improving Mobile Device Accessibility with Gaze & Grip Interaction

Qiushi Zhou, Eduardo Velloso

Though modern tablet devices offer users high processing power in a compact form factor, interaction while holding them still presents problems, forcing the user to alternate the dominant hand between holding and touching the screen. In this paper, we explore how eye tracking can minimize this problem through GazeGrip, a prototype interactive system for a tablet that integrates eye tracking and back-of-device touch sensing. We propose a design space for potential interaction techniques that leverage the power of this combination, as well as prototype applications that instantiate it. Our preliminary results highlight several opportunities enabled by the system: reduced fatigue while holding the device, minimal occlusion of the screen, and improved accuracy and precision in the interaction.